Jessy Lin

Hi!

I’m a final-year PhD student at Berkeley AI Research, advised by Anca Dragan and Dan Klein. My research is supported by the Apple Scholars in AI Fellowship. I’m also a visiting researcher at Meta AI.

My goal is to build AI that can augment humans: making people smarter and able to accomplish things they couldn’t do before. People often don’t know exactly what they want or how they want it done, so we’ll need collaboration — working together to synthesize human context with model intelligence.

I’ve worked on:

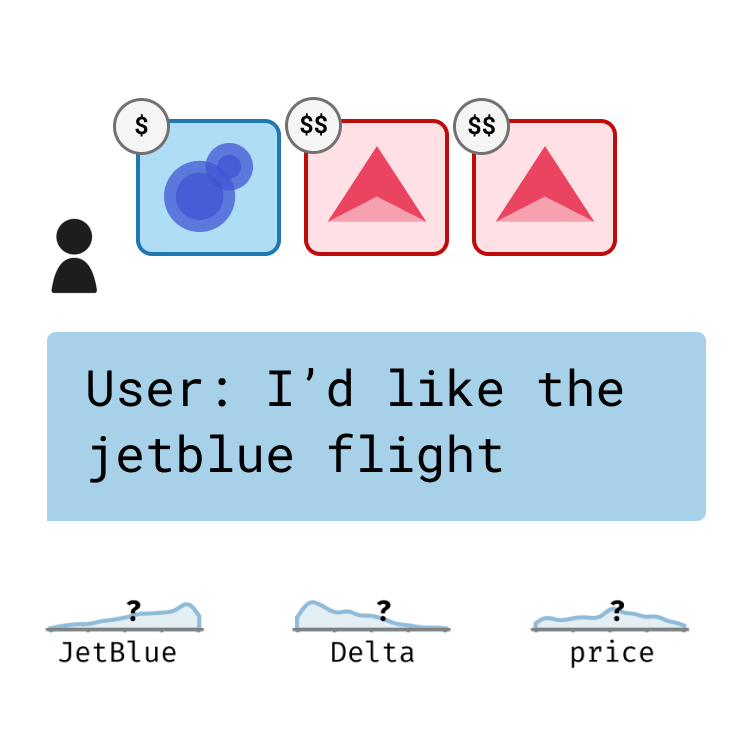

- training objectives that make models fundamentally more interactive (multimodal agents, coding)

- environments for collaboration (decision-making, preference elicitation)

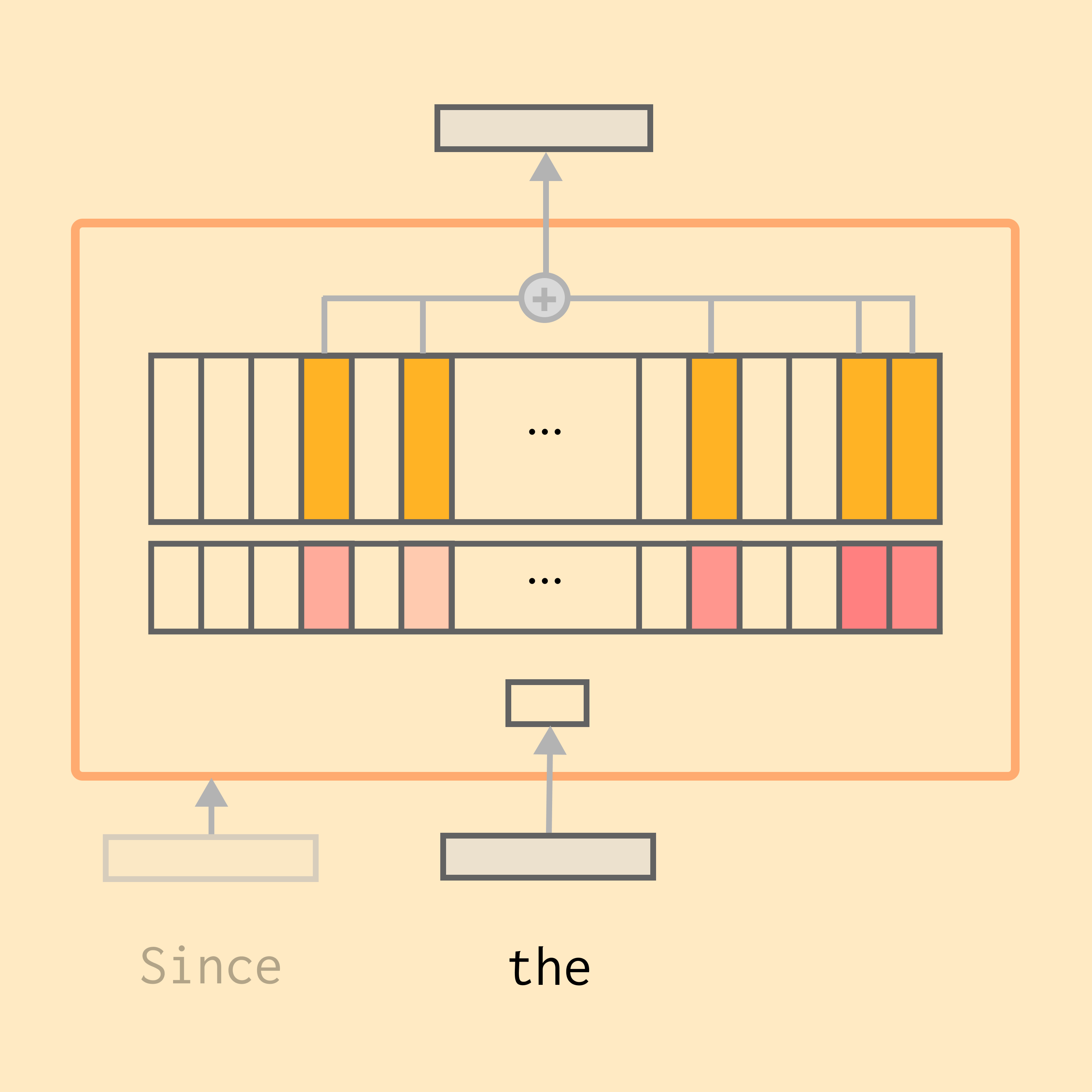

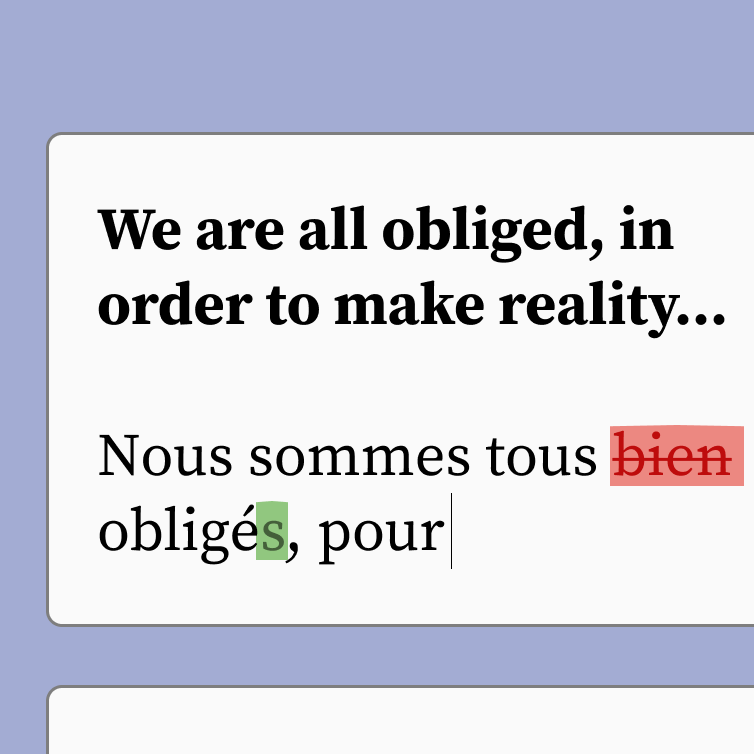

- continual learning & memory (synthetic data, memory architectures, Lilt)

Previously, I did research and product work at Lilt, focusing on continual adaptation and human-in-the-loop machine translation (Copilot for expert translators). I graduated from MIT with a double major in computer science and philosophy. There, I did research on human-inspired AI with the Computational Cognitive Science Group, advised by Kelsey Allen and Josh Tenenbaum, and on machine learning security as a founding member of labsix. I also spent a great summer with the Natural Language Understanding group at Google Research NY working on long-context memory architectures.

Research

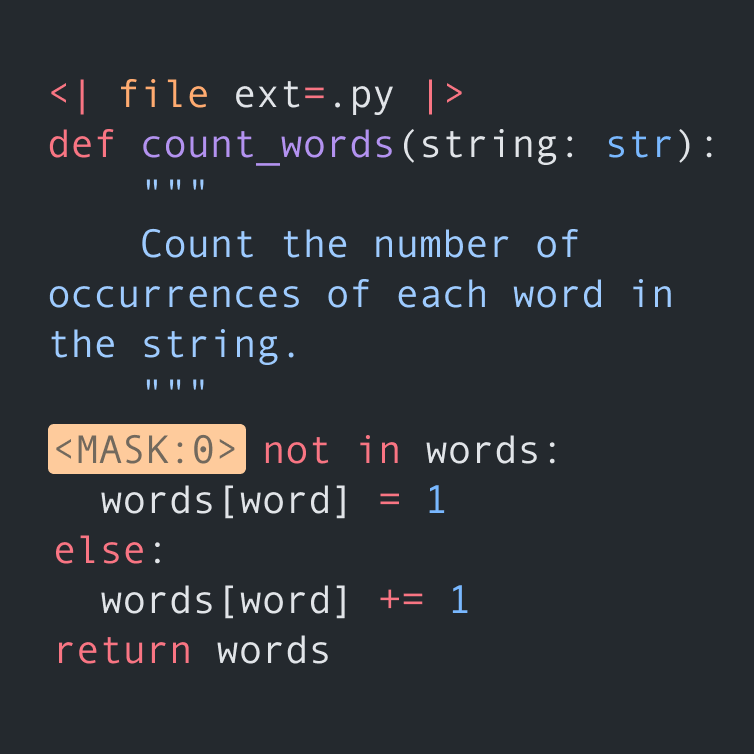

InCoder: A Generative Model for Code Infilling and Synthesis

ICLR 2023 (spotlight, top 6%)

We open-source a new large language model for code that can both generate code and fill in blanks, enabling tasks like docstring generation, code rewriting, type hint inference, and more.

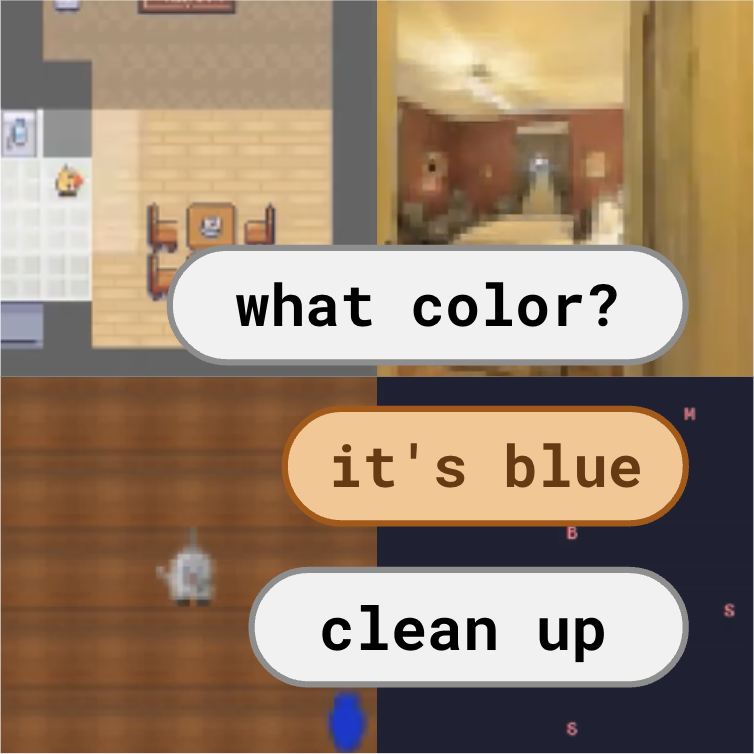

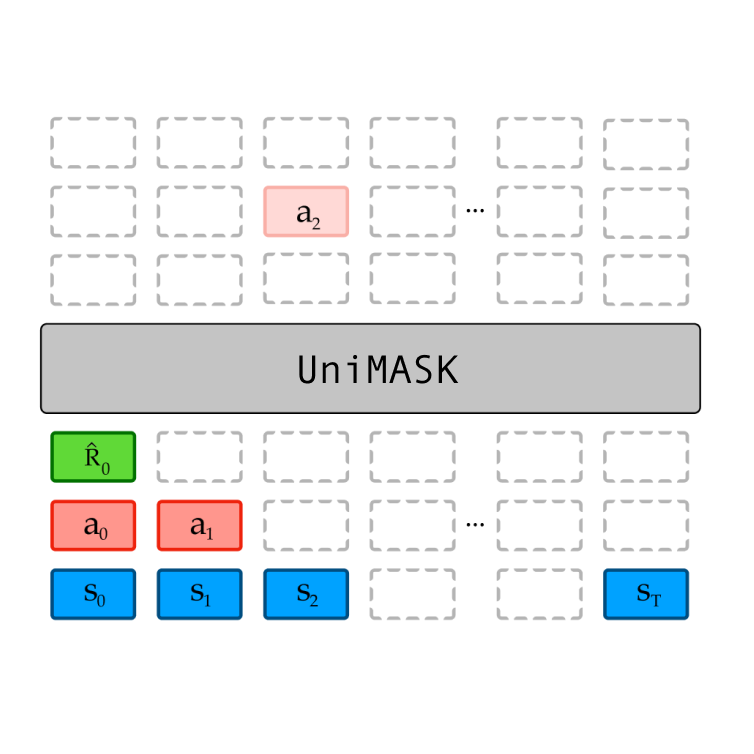

UniMASK: Unified Inference in Sequential Decision Problems

NeurIPS 2022 (oral, top 1.8%)

We show how a single model trained with a BERT-like masked prediction objective can unify inference in sequential decision-making settings (e.g., for RL): behavior cloning, future prediction, and more.